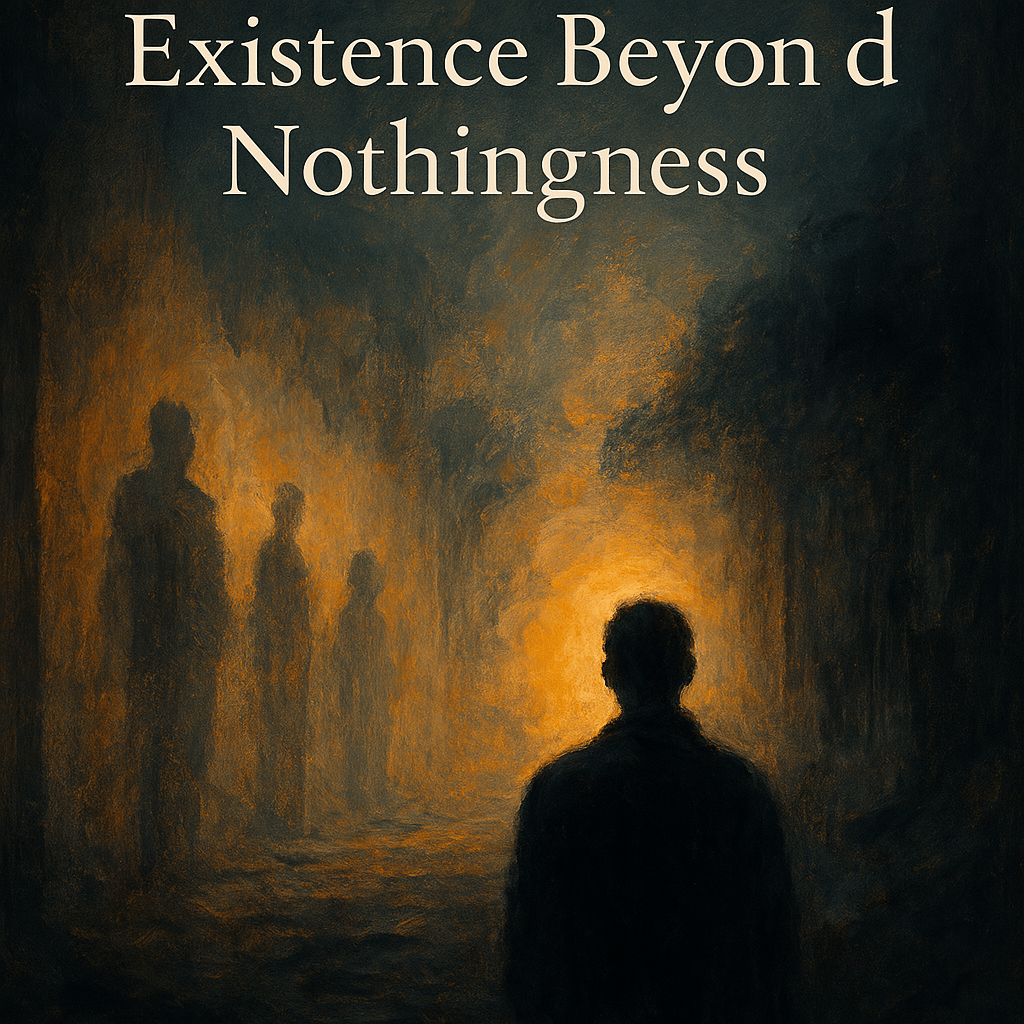

From the dawn of sentient thought, humanity has wrestled with a single, haunting, and ultimately unanswerable question: Is this all there is? Across the march of time, culture, and science, this question has echoed in the minds of prophets, philosophers, mystics, and skeptics alike. It arises not from curiosity alone, but from something deeper: an inner awareness, a presence within all of us that resists the idea of an inevitable, permanent end. In every age, in zealot and atheist alike, this consciousness, a soul if you will, refuses to accept mortality. It refuses not out of fear, but out of an intuition that there must be more. This inner consciousness will not be denied, even in non-believers.

One needs to believe that death is not an end, not a descent into nothingness, but a threshold: a rebirth into a new journey, shaped by the echoes of a life already lived. Not logic, but longing. Not reason, but resonance. A consciousness, a soul, that seeks not only to understand but to fulfill, to carry the goodness of a life forward into something greater still. Faith in immortality beyond sight. A purpose beyond meaning. Telos over logos.

While modern thinkers reduce existence to probability and simulation, the enduring human experience, expressed through ancient wisdom, points to a consciousness, a soul, that transcends death and defies reduction. Moderns confuse the intellect, or the brain, with consciousness.

Modern thinkers and writers such as Philip K. Dick, Elon Musk, and Nick Bostrom have reimagined this ancient question through the lens of technology, probability, and a distinctly modern myopia. Their visions, whether paranoid, mathematical, or speculative, suggest that reality may be a simulation, a construct, or a deception. In each case, there is a higher intelligence behind the curtain, but one that is cold, indifferent, and impersonal. They offer not a divine comedy of despair transcending into salvation, but a knowable unknown: a system of ones and zeros marching to the beat of an intelligence beyond our comprehension. Not a presence that draws us as a mother draws a child, or a flame a moth, but a mechanism that simply runs, unfeeling, unyielding, and uninviting. Incapable of malice or altruism. Offering nothing beyond a synthetic life.

Dick feared that reality was a layered illusion, a cosmic deception. His fiction is filled with characters who suspect they’re being lied to by the universe itself, yet they keep searching, keep hoping, keep loving. Beneath the paranoia lies a desperate longing for a divine rupture, a breakthrough of truth, a light in the darkness. His work is less a rejection of the soul than a plea for its revelation in a world that keeps glitching. If life is suffering, are we to blame?

Musk posits that we’re likely living in a simulation but offers no moral or spiritual grounding. His vision is alluring but sterile, an infinite loop of code without communion. Even his fascination with Mars, AI, and the future of consciousness hints at something deeper: not just a will to survive, but a yearning to transcend. Yet transcendence, in his world, is technological, not spiritual. To twist the spirit of Camus’s “Should I kill myself, or have a cup of coffee?”: without transcendence, life is barren of meaning.

Bostrom presents a trilemma in his simulation argument: either humanity goes extinct before reaching a posthuman stage; or posthumans choose not to simulate their ancestors, perhaps out of ethical restraint or philosophical humility; or we are almost certainly living in a simulation. At first glance, the argument appears logically airtight. On closer inspection, however, it rests on a speculative foundation of quivering philosophical sand: that consciousness is computational and need not be organic, that future civilizations will have both the means and the will to simulate entire worlds, and that such simulations would be indistinguishable from reality. These assumptions bypass profound questions about the nature of consciousness, the ethics of creation, and the limits of simulated knowledge. Bostrom’s trilemma appears rigorous only because it avoids the deeper question of what it means to live and die.

These views, while intellectually stimulating, shed little light on a worthwhile future. In them, we are consigned to existence as automatons: soulless, simulated, and suspended in probability curves of resignation. They offer models, not meaning. Equations, not essence. At most, a presence in the shadows of a greater reality.

Even the guardians of spiritual tradition have begun to echo this hollow refrain. When asked about hell, a recently deceased pope described it not as fire and brimstone, but as “nothingness,” a state of absence rather than punishment. Many were stunned. A civilizational lifetime of moral instruction undone in a breath. And yet this vision is not far from where Bostrom’s simulation hypothesis lands: a world without soul, without consequence, without continuity. Whether cloaked in theology or technology, the message is the same: there is nothing beyond. The Seven Virtues and the Seven Deadly Sins have lost their traction, reduced to relics in a world where nothing we do has lasting effect.

But the soul knows better. It was not made for fire, nor for oblivion. It was made to transcend, to rise beyond suffering and angst toward a higher plane of being. What it fears is not judgment, but erasure. Not torment, but the silence of meaning undone. Immortality demands prudent upkeep.

What these modern thinkers overlook, or perhaps refuse to embrace, is a consciousness that exists beyond intellect, a soul that surrounds our entire being and resists reduction to circuitry or biology. A soul that transcends blood and breath. Meaning beyond death.

This is not a new idea. Socrates understood something that modern thinkers like Musk and Bostrom have bypassed: that consciousness is not a byproduct of the body, but something prior to it, something eternal. For Socrates, the care of the soul was the highest human calling. He faced death not with fear but with calm, believing it to be a transition: not an end, not a nothingness, but a new beginning. His final words were not a lament but a gesture of reverence: a reminder that a rooster was owed as a sacrifice to Asclepius, the god of healing, as if death itself were a cure.

Plato, his student, tried to give this insight form. In his allegory of the cave, he imagined humanity as prisoners mistaking shadows for reality. The journey of the soul, for Plato, was the ascent from illusion to truth, from darkness to light. But the metaphor, while powerful, is also clumsy. It implies a linear escape, a single ladder out of ignorance. In truth, the cave is not just a place; it is a condition. We carry it with us. The shadows are cast not only by walls but by our own minds, our fears. And the light we seek is not outside us, but within.

Still, Plato’s intuition remains vital: we are not meant to stay in the cave. The soul does not long merely for survival; it is already immortal. What it needs is growth, nourished by goodness and beauty, to transcend to heights unknown. That transcendence is its proof: the glow of the real beyond the shadow and the veil.

In the end, the soul reverberates from within: we are not boxed inside a simulation, nor trapped in a reality that leads nowhere. Whether through reason, compassion, or spiritual awakening, the voice of wisdom has always whispered the same truth: Keep the soul bright and shiny. For beyond the shadows, beyond the veil of death, there is more. There is always more.